With decades of experience navigating the complexities of the global supply chain, Rohit Laila has developed a keen eye for technologies poised to revolutionize logistics and beyond. His insights bridge the gap between industrial necessity and technological possibility. In this conversation, we explore the groundbreaking acquisition of humanoid robotics firm Mentee Robotics by automotive AI giant Mobileye, a deal announced at CES 2026. We’ll delve into the staggering valuation jump that caught the industry’s attention, the technical prowess behind Mentee’s autonomous robots, and the strategic vision for a future defined by “physical AI,” where intelligent machines move seamlessly through our world.

Mentee Robotics achieved an astonishing leap in valuation, from $162 million to a $900 million acquisition price in less than a year. From your perspective, what key achievements or market realignments could possibly justify such explosive growth, and what does it tell us about the current investment climate for AI robotics?

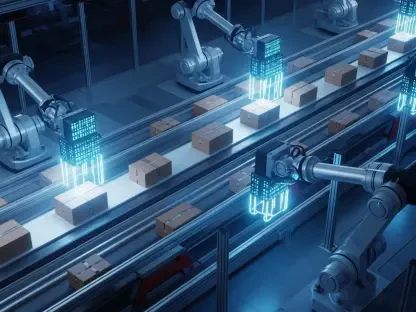

What we’re seeing is the market placing an immense premium on tangible progress. It’s not just about simulations anymore. In March 2025, Mentee secured a $21 million funding round that valued them at $162 million. But in the months that followed, they demonstrated real-world capabilities that investors are starved for. Being recognized by a major player like NVIDIA for their work in simulation was a significant validator, but the real catalyst was their November 2025 video. Seeing two humanoids autonomously move 32 boxes without any human intervention—that’s not a theoretical breakthrough; it’s a practical demonstration of commercial viability. This signals that the investment landscape is shifting from funding pure research to aggressively backing companies that can prove their robots are ready to leave the lab and enter the real world.

This deal is being framed as the creation of a leader in “physical AI,” merging Mobileye’s automotive AI with Mentee’s humanoid platform. Can you break down how Mobileye’s specific background in self-driving cars will concretely benefit the Menteebot, and what early applications might we see from this synergy?

This is the most exciting part of the acquisition. Mobileye has spent years mastering the art of creating robust, automotive-grade AI that can perceive and navigate the chaotic, unpredictable real world. They have an unparalleled AI infrastructure and, crucially, the experience of commercializing this technology on a global scale. For Mentee, this means they can leapfrog the grueling process of productizing their platform. Instead of spending years figuring out supply chains and manufacturing for a scalable, safe, and cost-effective robot, they can tap directly into Mobileye’s expertise. The first applications will almost certainly be in environments that mirror the challenges of their demonstration—logistics centers, warehouses, and light manufacturing, where tasks like moving goods from one place to another are constant. Mobileye’s AI will make the Menteebots exceptionally good at navigating these complex, dynamic spaces safely and efficiently.

That 2025 demonstration of two Menteebots moving 32 boxes is a significant milestone. Could you walk us through the layers of technology that enable a robot to perform such a task with full autonomy, and why is the lack of teleoperation so critical for the industry?

The magic here is in the “end-to-end” autonomy. First, the robot engages in advanced scene understanding. It’s not just seeing boxes; it’s identifying their location, the configuration of the eight piles, and the specific dimensions and available space on the storage racks. Then, it autonomously plans the entire sequence of actions without a human drawing out paths or pre-programming motions. Finally, it executes that plan, which requires reliable locomotion to move through the space, precise navigation to approach the boxes and racks, and safe manipulation to grasp and place the objects without dropping them. The absence of teleoperation is the game-changer. It means the robot is making its own decisions. This is what separates a useful tool from a remote-controlled puppet, and it is the only way to achieve true operational scale. You can’t hire an army of operators to control an army of robots; the robots must think for themselves.
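To make the perceive, plan, act layering concrete, here is a toy sketch of the planning layer only. It assumes perception has already produced a list of boxes (with pile index and width) in pick order, plus the rack slots with their free width. The `Box`/`Slot` types, the greedy first-fit placement rule, and the round-robin split between two robots are all illustrative assumptions, not Mentee’s actual planner.

```python
from dataclasses import dataclass


@dataclass
class Box:
    box_id: int
    pile: int         # which of the piles the box sits on
    width_cm: float   # width estimated by the (assumed) perception layer


@dataclass
class Slot:
    slot_id: str
    free_width_cm: float  # free shelf space reported by perception


def plan_moves(boxes, slots, robots=("robot_a", "robot_b")):
    """Hypothetical greedy planner: put each box in the first rack slot with
    enough free width, alternating the work between the robots.

    `boxes` is assumed to already be in pick order (top of each pile first).
    Returns a list of (robot, box_id, pile, slot_id) pick-and-place tasks.
    """
    free = {s.slot_id: s.free_width_cm for s in slots}
    plan = []
    for i, box in enumerate(boxes):
        # First-fit: the earliest slot that can still hold this box.
        target = next((sid for sid, w in free.items() if w >= box.width_cm), None)
        if target is None:
            raise ValueError(f"no rack space for box {box.box_id}")
        free[target] -= box.width_cm
        robot = robots[i % len(robots)]  # round-robin task assignment
        plan.append((robot, box.box_id, box.pile, target))
    return plan


# 32 boxes in eight piles of four, and eight rack slots that each fit four boxes.
boxes = [Box(box_id=i, pile=i // 4, width_cm=30.0) for i in range(32)]
slots = [Slot(slot_id=f"rack-{r}", free_width_cm=120.0) for r in range(8)]
plan = plan_moves(boxes, slots)
```

A real system would also have to handle re-planning when a grasp fails or a box is not where perception reported it; the sketch only shows how a scene model can be turned into a task list without a human specifying each move.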

Mobileye’s CEO referred to this as the dawn of “Mobileye 3.0.” How do you interpret that statement? What does this move into humanoid robotics signify for the company’s core automotive business and its broader identity moving forward?

“Mobileye 3.0” is a declaration that the company is evolving beyond the car. For years, they have been a leader in creating the “eyes” and “brain” for autonomous vehicles. Now, they’re building the “body.” This acquisition transforms their identity from a premier automotive technology supplier into a foundational physical AI company. The integration means their long-term roadmap is no longer just about advancing self-driving levels in cars. It’s about deploying intelligent, mobile agents into every corner of the physical world. The core automotive business will benefit immensely; the challenges of teaching a humanoid to manipulate objects will provide invaluable data and AI advancements that can feed back into making their vehicle systems even smarter and more capable of understanding complex human environments.

The ability of Menteebots to follow natural-language instructions, as shown in late 2025, seems to be a core part of their platform. Could you give us a glimpse into the kind of AI that powers this and perhaps an example of how a simple command translates into a complex series of robotic actions?

This capability is what makes the technology truly accessible. Behind the scenes, this is powered by sophisticated AI models, likely some form of large language model or vision-language model, that act as a translator between human intent and robotic action. Imagine telling the robot, “Please organize the boxes on those shelves, starting with the heaviest ones on the bottom.” The AI has to first parse that sentence to understand several concepts: “organize,” “boxes,” “shelves,” “heaviest,” and “bottom.” It then uses its vision systems to locate all these elements in the room. Finally, it generates a complex, multi-step plan: assess the weight of each box, plan a path to the first heavy box, pick it up, navigate to the shelves, place it on the bottom rack, and repeat the entire process until the task is complete. It’s the seamless conversion of a simple verbal request into a detailed, autonomous mission plan that is so revolutionary.
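The last step of that example, turning an understood instruction into a step list, can be sketched in a few lines. This assumes the language model has already converted the sentence into a structured goal (the `Goal` fields are hypothetical) and that each box’s weight can be estimated; `expand_goal` and its primitive step names are illustrative inventions, not Mentee’s interface.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    # Hypothetical structured output a language model might produce for:
    # "Please organize the boxes on those shelves, starting with the heaviest ones on the bottom."
    action: str    # "organize"
    objects: str   # "boxes"
    target: str    # "shelves"
    order_by: str  # "weight": heaviest boxes go on the lowest level


def expand_goal(goal, box_weights_kg, shelf_levels, boxes_per_level):
    """Expand a structured goal into ordered primitive steps (sketch only).

    box_weights_kg: {box_id: estimated weight}, assumed to come from sensing.
    Level 0 is the bottom shelf.
    """
    if goal.action != "organize" or goal.order_by != "weight":
        raise NotImplementedError("sketch only handles weight-ordered organizing")
    if len(box_weights_kg) > shelf_levels * boxes_per_level:
        raise ValueError("not enough shelf capacity")
    steps = [("assess_weights", tuple(sorted(box_weights_kg)))]
    # Heaviest first, so the heaviest boxes fill the bottom level.
    ordered = sorted(box_weights_kg, key=lambda b: box_weights_kg[b], reverse=True)
    for i, box_id in enumerate(ordered):
        level = i // boxes_per_level
        steps += [
            ("navigate_to", box_id),
            ("pick", box_id),
            ("navigate_to", goal.target),
            ("place", box_id, level),
        ]
    return steps


goal = Goal(action="organize", objects="boxes", target="shelves", order_by="weight")
weights = {"A": 4.0, "B": 12.5, "C": 7.2, "D": 1.1}
steps = expand_goal(goal, weights, shelf_levels=2, boxes_per_level=2)
```

Here boxes B and C (the two heaviest) are placed on level 0 and A and D on level 1. Keeping the language model’s job to producing a small structured goal, and leaving the step expansion to deterministic code, is one common way to make such plans easier to check, though the source does not say how Mentee divides that work.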

What is your forecast for physical AI?

My forecast is one of rapid acceleration and convergence. Over the next few years, we are going to see a gold rush to solve the “last meter” problem: not just getting a vehicle to a location, but getting a physical task done at that location. The synergy we see in the Mobileye and Mentee deal, combining specialized, hardened AI from one industry with the general-purpose hardware of another, will become a dominant template. We’ll move from impressive but isolated demos to pilot programs in real-world logistics and manufacturing within the next 24 months. The ultimate goal isn’t just a robot that can perform a task, but a cost-effective, scalable one that can be produced and deployed by the thousands. This acquisition signals that the industrial-scale era of physical AI is officially underway.