With decades of experience spanning logistics, supply chain, and delivery, Rohit Laila brings a unique perspective to the intersection of technology and transportation. His passion for innovation is focused on solving some of the rail industry’s most persistent challenges. Today, we explore how advanced perception systems, combining the all-weather resilience of radar with the intelligence of AI, are pushing the boundaries of what’s possible in rail safety, efficiency, and automation. We’ll discuss how these systems see through dense fog, differentiate real threats from background noise, and even predict track failures before they happen, ultimately creating a safer and more reliable railway for the future.

Rail operators often must reduce speed in adverse weather like dense fog or heavy snow due to the limitations of human and camera vision. How do radar-powered systems provide operational certainty in these conditions, and what tangible impact does this have on maintaining schedules and punctuality?

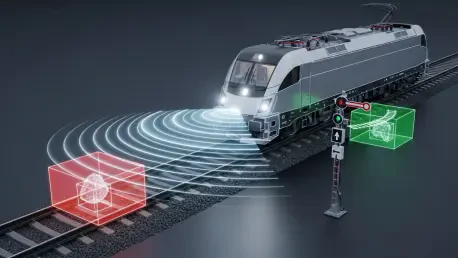

This is the central issue we’re tackling. When visibility drops, operational certainty evaporates. A driver’s vision, or even a standard camera, might be limited to a few hundred meters or less in dense fog. Given that a train’s braking distance can be ten times that of a car, this creates a massive safety risk. Radar completely changes this dynamic. Because it operates at millimeter-wave frequencies, it can penetrate fog, heavy rain, and snow with minimal performance degradation. It gives the operator a clear picture of the track ahead for over 1,000 meters, regardless of the weather. This provides the confidence to maintain line speed safely, which has a direct and profound impact on punctuality. Instead of delays cascading through the network, trains can stick to their timetables, reducing congestion and keeping the entire system running smoothly.
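The braking-distance argument can be made concrete with the constant-deceleration formula d = v²/(2a). The sketch below is a back-of-the-envelope illustration only: the deceleration values (0.7 m/s² for a train, 7 m/s² for a car) are assumed round numbers rather than figures for any specific vehicle, and driver reaction time is ignored.

```python
import math

# Illustrative assumptions, not measured values for any particular vehicle.
TRAIN_DECEL_MPS2 = 0.7   # assumed train braking deceleration
CAR_DECEL_MPS2 = 7.0     # assumed car braking deceleration on a dry road


def kmh_to_mps(speed_kmh: float) -> float:
    return speed_kmh / 3.6


def braking_distance_m(speed_kmh: float, decel_mps2: float) -> float:
    """Distance to stop from speed_kmh at constant deceleration: v^2 / (2a).
    Reaction time is deliberately ignored."""
    v = kmh_to_mps(speed_kmh)
    return v * v / (2.0 * decel_mps2)


def max_speed_for_sight_kmh(sight_m: float, decel_mps2: float) -> float:
    """Highest speed (km/h) at which the vehicle can still stop within sight_m."""
    return math.sqrt(2.0 * decel_mps2 * sight_m) * 3.6


if __name__ == "__main__":
    train = braking_distance_m(120, TRAIN_DECEL_MPS2)
    car = braking_distance_m(120, CAR_DECEL_MPS2)
    print(f"120 km/h: train {train:.0f} m, car {car:.0f} m, ratio {train / car:.1f}")
    for sight in (200, 1000):
        speed = max_speed_for_sight_kmh(sight, TRAIN_DECEL_MPS2)
        print(f"sight {sight} m -> max train speed {speed:.0f} km/h")
```

Under these assumptions, a train at 120 km/h needs about 794 m to stop versus about 79 m for a car, and 200 m of visibility limits the train to roughly 60 km/h, while 1,000 m of reliable sensing range allows about 135 km/h.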

While radar provides a robust, all-weather sensing backbone, physical AI adds a crucial intelligence layer. Can you provide a step-by-step example of how AI interprets raw radar signals to distinguish a real hazard, like debris on the track, from a false positive like environmental clutter?

Absolutely. Think of raw radar data as a complex, noisy echo of the world. A metal signpost near the track might create a strong reflection, just as a piece of fallen debris would. A classical system might flag both as potential obstacles, leading to constant, unnecessary alerts. This is where physical AI comes in. First, the system gathers the raw radar returns from an object. Then, instead of just seeing a “blip,” the AI analyzes that blip’s signature: its size, shape, texture, and how it reflects the radar waves. Next, it assesses behavioral cues. Is the object stationary? Is it moving in a way that’s characteristic of an animal or a person? By training on thousands of examples, the AI learns that debris has a very different structural and geometric signature from, say, trackside equipment or scattering from rain. It effectively learns to filter out “environmental clutter” by recognizing it for what it is, so it can classify the debris as a genuine hazard with high confidence and issue a timely, actionable alert to the driver.
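The classification steps described above can be sketched with a deliberately simple stand-in: a nearest-centroid classifier over three hypothetical features per radar cluster (mean radar cross-section, spatial extent, ground speed), followed by a check that the object lies inside the train’s path. This is not the system’s actual model; the feature set, every training value, the class names, and the 2 m corridor half-width are invented for illustration.

```python
import math
from dataclasses import dataclass

# Hypothetical features per radar cluster: (mean RCS in dBsm, extent in m, ground speed in m/s).
# Every number below is invented for illustration.
TRAINING = {
    "debris": [(5.0, 1.0, 0.0), (8.0, 1.5, 0.0), (6.0, 0.8, 0.0)],
    "signpost": [(20.0, 0.3, 0.0), (22.0, 0.4, 0.0)],
    "animal": [(2.0, 1.8, 1.2), (0.0, 1.4, 0.8)],
    "clutter": [(-15.0, 6.0, 0.2), (-12.0, 5.0, 0.4)],
}
HAZARD_LABELS = {"debris", "animal"}
CORRIDOR_HALF_WIDTH_M = 2.0  # assumed half-width of the train's swept path


@dataclass
class Cluster:
    rcs_dbsm: float
    extent_m: float
    speed_mps: float
    lateral_m: float  # offset from the track centreline; not used for classification

    def features(self) -> tuple:
        return (self.rcs_dbsm, self.extent_m, self.speed_mps)


def train(examples: dict) -> dict:
    """Step 1: learn one centroid (mean feature vector) per class."""
    return {
        label: tuple(sum(col) / len(rows) for col in zip(*rows))
        for label, rows in examples.items()
    }


def classify(model: dict, cluster: Cluster) -> str:
    """Step 2: pick the class whose centroid is nearest in feature space."""
    return min(model, key=lambda label: math.dist(model[label], cluster.features()))


def assess(model: dict, cluster: Cluster) -> tuple:
    """Step 3: treat it as a hazard only if it is a hazard class inside the path."""
    label = classify(model, cluster)
    in_path = abs(cluster.lateral_m) <= CORRIDOR_HALF_WIDTH_M
    return label, label in HAZARD_LABELS and in_path


if __name__ == "__main__":
    model = train(TRAINING)
    print(assess(model, Cluster(7.0, 1.2, 0.0, lateral_m=0.3)))    # ('debris', True)
    print(assess(model, Cluster(21.0, 0.3, 0.0, lateral_m=3.5)))   # ('signpost', False)
    print(assess(model, Cluster(-14.0, 5.5, 0.3, lateral_m=0.0)))  # ('clutter', False)
```

Because the features are not normalized, radar cross-section dominates the distance here; a production system would scale its features and use a far richer learned model, but the basic flow (learn class signatures, classify a new return, then gate by location) mirrors the steps described in the answer.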

The transition from automotive to rail presents unique challenges, such as much longer braking distances and different types of track obstructions. Could you discuss the key adaptations required to make automotive-grade radar effective for long-range rail applications, particularly for detecting obstacles over 1,000 meters away?

This is a critical point. While the core radar technology matured in the automotive sector with features like emergency braking, you can’t simply take a car radar and put it on a locomotive. The scale is completely different. A car needs to see a few hundred meters ahead; a train traveling at speed needs to see over a kilometer to have a safe braking margin. The first major adaptation is sheer power and resolution at range. We had to engineer a system that could not only receive usable returns from beyond 1,200 meters but also distinguish a small object from track infrastructure at that distance. Second, the AI models are trained on an entirely different library of potential hazards. Instead of just cars and pedestrians, the system needs to reliably identify rail-specific obstacles like misaligned switches, rockfalls, or large animals on the track. It’s a fundamental re-engineering of the perception stack for a far more demanding operational environment.
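Two textbook radar relationships show why “sheer power and resolution at range” is the hard part: echo power from a target falls as 1/R⁴, and a fixed angular beamwidth covers a wider swath the farther out it looks. The bandwidth, beamwidth, and 250 m automotive reference range below are illustrative assumptions, not specifications of the system discussed here.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Minimum range separation between two resolvable targets: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def cross_range_cell_m(range_m: float, beamwidth_deg: float) -> float:
    """Approximate width of one angular resolution cell at a given range."""
    return range_m * math.radians(beamwidth_deg)


def extra_path_loss_db(r_far_m: float, r_near_m: float) -> float:
    """Monostatic radar echo power falls as 1/R^4, so moving a target from
    r_near to r_far costs 40 * log10(r_far / r_near) dB."""
    return 40.0 * math.log10(r_far_m / r_near_m)


if __name__ == "__main__":
    # Illustrative parameters (assumptions, not a specific product's specs).
    print(f"range resolution @1 GHz bandwidth: {range_resolution_m(1e9):.2f} m")
    print(f"cross-range cell @1200 m, 1 deg beam: {cross_range_cell_m(1200, 1.0):.1f} m")
    print(f"extra loss 250 m -> 1200 m: {extra_path_loss_db(1200, 250):.1f} dB")
```

With these numbers, an echo from 1,200 m is about 27 dB (more than 500 times) weaker than the same target at 250 m, and a 1° beam is about 21 m wide at that range, so a small object and nearby trackside infrastructure can easily share one angular cell unless the sensor and tracking are designed for it.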

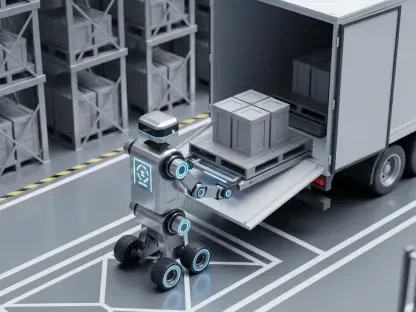

Your system is undergoing trials with Indian Railways in extreme winter fog and is also being used in an autonomous shunting program in Europe. Could you share an anecdote from one of these deployments that illustrates how the technology overcame a specific challenge that traditional methods couldn’t?

The trials on Indian Railways really stand out. On some corridors, winter fog becomes so dense that visibility drops to near zero. Operationally, this is a nightmare, often grinding traffic to a halt. During one of the trials, the crew was operating in exactly these conditions, unable to see more than a few carriage lengths ahead. Our system, however, was painting a clear picture of the track for over 1,200 meters on their display. It suddenly flagged a significant, stationary obstacle far down the track. The crew was skeptical at first because they couldn’t see a thing, but the alert persisted. They began to slow the train, and as they got closer, a large animal that had wandered onto the tracks emerged from the fog. Without the system, they would have been traveling blind, and a dangerous collision would have been almost certain. It was a powerful real-world demonstration of the technology providing a “digital sixth sense” precisely when human vision had failed.

Beyond hazard detection, you mention using this same sensor stack for predictive maintenance by learning what a “healthy” track looks like. How does the system identify early signs of degradation, like buckling, and how does this translate into more stable and predictable maintenance schedules for infrastructure managers?

This is one of the most exciting secondary benefits. As a train runs its route, the perception system is constantly scanning the track and its immediate surroundings. The AI builds a detailed baseline model of what that specific stretch of track looks like under normal, “healthy” conditions. It learns the precise geometry of the rails, the position of signals, and the state of the track bed. On subsequent journeys, it compares the live data to this baseline. If it detects a subtle, progressive change, such as a minute deformation in the rail that could be an early sign of heat-induced buckling, or a shift in the track ballast, it flags that location as an anomaly. For an infrastructure manager, this is revolutionary. Instead of relying on periodic manual inspections or waiting for a failure, they get an early warning about a specific location, allowing for targeted, proactive intervention. This prevents unplanned outages and allows them to build far more stable and efficient maintenance workbanks.
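The baseline-and-compare idea can be sketched for a single scalar measurement at one track location, such as a lateral rail offset recorded on each pass. To catch changes that are subtle and progressive rather than abrupt, this sketch swaps in a standard two-sided CUSUM change detector; that choice, the `k` and `h` settings, and all the measurement values are assumptions for illustration, not the method described in the interview.

```python
from statistics import mean, stdev


def learn_baseline(healthy_runs):
    """Mean and spread of one location's measurement over runs judged healthy."""
    return mean(healthy_runs), stdev(healthy_runs)


def cusum_first_alarm(live_runs, mu, sigma, k=0.5, h=5.0):
    """Two-sided tabular CUSUM on standardized residuals.

    k is the allowance (in sigmas) that absorbs ordinary run-to-run noise;
    h is the decision threshold. Small deviations that keep pointing the same
    way accumulate until they cross h. Returns the index of the first alarming
    run, or None if no alarm is raised.
    """
    s_hi = s_lo = 0.0
    for i, x in enumerate(live_runs):
        z = (x - mu) / sigma
        s_hi = max(0.0, s_hi + z - k)  # accumulated evidence of an upward shift
        s_lo = max(0.0, s_lo - z - k)  # accumulated evidence of a downward shift
        if s_hi > h or s_lo > h:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical lateral rail offset (mm) at one location, one value per pass.
    healthy = [0.0, 1.0, -1.0, 0.5, -0.5]
    drifting = [0.0, 0.2, 0.5, 0.8, 1.2, 1.6, 2.0, 2.5]
    mu, sigma = learn_baseline(healthy)
    print(cusum_first_alarm(drifting, mu, sigma))  # 6
```

Here a plain 3σ check on each individual run would not fire until the final run (2.5 mm, about 3.2σ), whereas the CUSUM accumulates the consistent upward drift and flags it one run earlier, at index 6.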

Gaining operator trust is essential for the adoption of any new driver assistance system. What are the most important factors in designing a perception system that drivers find reliable, and how do you ensure the system provides actionable alerts without causing “alarm fatigue”?

Trust is everything. If drivers don’t believe in the system, they won’t rely on it, and its safety benefits are lost. The single most important factor is consistency, which above all means minimizing false positives. A system that constantly cries wolf will be ignored. This is where the sophistication of the AI is paramount. By accurately distinguishing a genuine hazard from environmental noise, we ensure that when an alert does sound, it’s for a credible threat. The second factor is the clarity of the information provided. It’s not enough to flash a red light; the system needs to tell the operator what it’s seeing, where it is, and how far away it is. That gives drivers the context they need to make an informed decision. By ensuring alerts are both highly reliable and easily interpretable, we build that essential trust and avoid the “alarm fatigue” that plagues less intelligent systems.
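One common way to suppress one-off false alarms, sketched here as an assumption rather than as this system’s logic, is M-of-N confirmation: raise an alert only when a confident detection of the same tracked object appears in at least M of the last N radar frames, then phrase the alert as what, where, and how far. The `Track` fields, the 3-of-5 rule, the 0.8 confidence threshold, and the one-gate-per-tracked-object setup are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    label: str          # what the classifier thinks the object is
    range_m: float      # distance ahead along the track
    side: str           # where it is, e.g. "on track", "left clearance"
    confidence: float   # classifier confidence in [0, 1]


class AlertGate:
    """Per tracked object: alert only when a confident detection recurs
    in at least m of the last n radar frames (M-of-N confirmation)."""

    def __init__(self, m: int = 3, n: int = 5, min_confidence: float = 0.8):
        self.m = m
        self.min_confidence = min_confidence
        self.hits = deque(maxlen=n)  # rolling window of per-frame hit/miss flags

    def update(self, track: Optional[Track]) -> Optional[str]:
        # A missing or low-confidence detection counts as a miss for this frame.
        hit = track is not None and track.confidence >= self.min_confidence
        self.hits.append(hit)
        if hit and sum(self.hits) >= self.m:
            # Tell the driver what, where, and how far, not just "alarm".
            return (f"{track.label.upper()} {track.side}, "
                    f"{track.range_m:.0f} m ahead ({track.confidence:.0%} confidence)")
        return None


if __name__ == "__main__":
    gate = AlertGate()
    obstacle = Track("animal", 1180.0, "on track", 0.92)
    # The obstacle is missed in frames 2 and 4 (e.g. fading returns in fog).
    for frame in (obstacle, None, obstacle, None, obstacle):
        print(gate.update(frame))
    # Prints None four times, then: ANIMAL on track, 1180 m ahead (92% confidence)
```

The design trade-off is latency versus nuisance alerts: a larger M or a higher confidence threshold filters more clutter but delays the first warning by a few frames.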

What is your forecast for the adoption of advanced perception systems in rail over the next five to ten years, particularly regarding the move toward higher grades of automation (GoA)?

I believe we’re at a tipping point. Over the next five to ten years, I forecast that these advanced perception systems will become standard equipment, not just a niche technology. Initially, we’ll see widespread adoption in driver assistance systems (GoA 2), where the technology acts as a crucial co-pilot, enhancing safety and operational efficiency, especially on high-density routes. The real game-changer, however, will be its role in enabling higher grades of automation. For GoA 4, or fully unattended operation, you absolutely need a perception backbone that is immune to weather and lighting conditions. You can’t have an autonomous train that has to stop every time it gets foggy. Because radar fused with AI provides that “all-visibility” capability, it is the foundational enabler for making widespread, safe, and reliable autonomous rail a reality. The work we’re doing in autonomous shunting is just the beginning; the mainline is next.