The long-held vision of a single intelligence commanding a diverse fleet of robots is moving from science fiction toward working technology. Humanoid, a UK-based artificial intelligence company, has introduced KinetIQ, an AI framework designed for end-to-end orchestration of heterogeneous humanoid robot fleets. The system is intended to act as a centralized nervous system for robots working across a wide range of settings, from large industrial warehouses and busy service businesses to smart homes. Its core objective is to provide one control architecture that can command robots with very different physical forms, or embodiments. By coordinating the interactions between these robots, the framework aims to let a collection of individual machines pursue large-scale, collaborative goals as a single, coordinated workforce.

A New Foundation for Collective Intelligence

At the core of KinetIQ is the principle of being “cross-embodiment”: a single, centralized AI model can command robots with fundamentally different physical structures. The same intelligence can direct a bipedal robot navigating a cluttered floor and a wheeled platform moving heavy pallets, treating each as a specialized component of a larger whole. The main advantage of this approach is collective learning. Data from one robot's operational experience, whether a task succeeded or failed, feeds back into the shared model, and the improved model is then used by every robot in the fleet, regardless of its physical form. A lesson a humanoid robot learns about gripping a particular object can therefore inform the strategy of a wheeled robot with a different manipulator arm. This should shorten the learning curve for the whole fleet compared with training each robot type in isolation.
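The following minimal Python sketch makes the data flow of pooled, cross-embodiment learning concrete. It is a toy, not KinetIQ's model. A single shared table of per-skill success estimates (with Laplace smoothing) stands in for the central model, and the `Experience` and `SharedSkillModel` names are hypothetical. Statistics are keyed by skill rather than by embodiment, so an attempt logged by a bipedal robot updates the estimate a wheeled robot reads.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Iterable


@dataclass(frozen=True)
class Experience:
    """One logged attempt by one robot (hypothetical record format)."""
    robot_id: str
    embodiment: str  # e.g. "bipedal" or "wheeled"
    skill: str       # e.g. "grip_box"
    success: bool


@dataclass
class SharedSkillModel:
    """Toy stand-in for a single model shared by every embodiment in the fleet.

    Counts are keyed by skill only, so experience from any robot type
    updates the estimate that all other robot types read.
    """
    attempts: Counter = field(default_factory=Counter)
    successes: Counter = field(default_factory=Counter)

    def update(self, batch: Iterable[Experience]) -> None:
        for exp in batch:
            self.attempts[exp.skill] += 1
            self.successes[exp.skill] += int(exp.success)

    def success_rate(self, skill: str) -> float:
        # Laplace-smoothed estimate: 0.5 for a skill nobody has tried yet.
        return (self.successes[skill] + 1) / (self.attempts[skill] + 2)


# A humanoid logs four attempts; a wheeled robot then queries the same skill.
fleet_model = SharedSkillModel()
fleet_model.update(
    [Experience("humanoid-1", "bipedal", "grip_box", True)] * 3
    + [Experience("humanoid-1", "bipedal", "grip_box", False)]
)
wheeled_estimate = fleet_model.success_rate("grip_box")  # (3 + 1) / (4 + 2)
```

A real cross-embodiment model would learn shared representations and map them onto each robot's own action space. The counting here only illustrates that one robot's experience becomes available to all the others.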

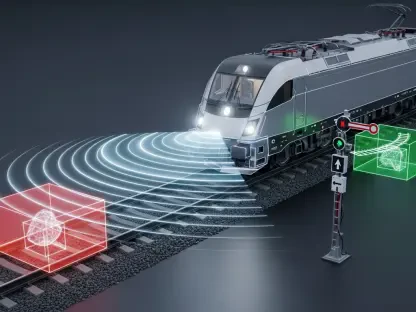

The second foundational pillar is a “cross-timescale” architecture, in which the system operates simultaneously across four cognitive layers. Each layer works on its own timescale, forming a hierarchy loosely analogous to biological cognition. At the top, fleet-wide strategic decisions about resource allocation and longer-term goals are made over seconds or even minutes. Moving down the hierarchy, the timescale shortens. At the lowest level, joint-control adjustments are made every few tens of milliseconds to keep the robot dynamically stable and its motion fluid. The design follows an agentic pattern: each layer treats the layer directly beneath it as a set of available tools and orchestrates them through prompting and tool use to accomplish the goals assigned from above. This turns abstract strategy into precise physical action one layer at a time and lets the system scale naturally.
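The agentic layering can be sketched in a few dozen lines of Python. In this hypothetical sketch, each `Layer` exposes its capabilities to the layer above as plain callables, and a planner function stands in for the prompting and tool use the article describes. The capability names and the planners are illustrative assumptions. So are all the decision intervals except System 0's 50 Hz rate (0.02 s).

```python
from typing import Callable, Dict, List, Optional, Tuple

Plan = List[Tuple[str, str]]  # [(tool_name, argument), ...]


class Layer:
    """One cognitive layer that sees the layer below only as named tools."""

    def __init__(self, name: str, period_s: float, capabilities: List[str],
                 planner: Callable[[str], Plan],
                 below: Optional["Layer"] = None) -> None:
        self.name = name
        self.period_s = period_s          # nominal decision interval (illustrative)
        self.capabilities = capabilities  # what this layer offers the layer above
        self.planner = planner            # goal -> calls on the tools below
        self.below = below
        self.log: List[str] = []          # goals this layer has received

    def tools(self) -> Dict[str, Callable[[str], None]]:
        """The interface the layer above sees: one callable per capability."""
        return {cap: (lambda arg, cap=cap: self.run(f"{cap}({arg})"))
                for cap in self.capabilities}

    def run(self, goal: str) -> None:
        self.log.append(goal)
        if self.below is None:
            return  # bottom layer: a real System 0 would drive the joints here
        tools = self.below.tools()
        for tool_name, arg in self.planner(goal):
            tools[tool_name](arg)


system0 = Layer("System 0", 0.02, ["reach_pose"], planner=lambda goal: [])
system1 = Layer(
    "System 1", 0.5, ["pick", "place", "move_to"],
    # Choose which body part's pose target serves the sub-task.
    planner=lambda goal: [("reach_pose",
                           ("pelvis:" if goal.startswith("move_to") else "hand:") + goal)],
    below=system0,
)
system2 = Layer(
    "System 2", 5.0, ["do_task"],
    # Fixed decomposition for the demo; a real System 2 would reason from sensor input.
    planner=lambda goal: [("move_to", "shelf"), ("pick", "tote"),
                          ("move_to", "conveyor"), ("place", "tote")],
    below=system1,
)
system3 = Layer("System 3", 30.0, [], planner=lambda goal: [("do_task", goal)],
                below=system2)

system3.run("move tote to conveyor")
```

The point of the pattern is that System 3 never deals with poses or joints. It sees only `do_task`, and each layer's interface to the one above stays small.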

The Strategic Mind of the Machine

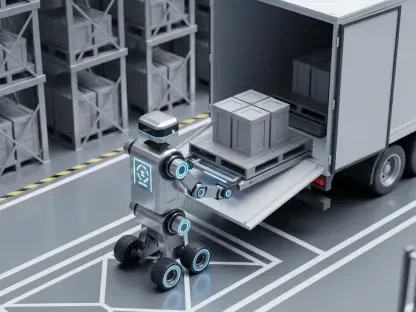

At the highest level of abstraction, System 3 acts as the Humanoid AI Fleet Agent, the conductor of the entire robotic orchestra. This agentic AI layer treats each robot not as a separate entity to be micromanaged but as a specialized tool in its repertoire. It makes strategic decisions within seconds about how to deploy those tools to optimize the fleet's overall efficiency and throughput. It is built to integrate with the facility management systems common in logistics, retail, and manufacturing, and it can also be adapted to service-industry scenarios or to coordinating devices in a smart-home ecosystem. At this level, the KinetIQ Agentic Fleet Orchestrator processes a continuous stream of high-level inputs: new task requests, desired outcomes, Standard Operating Procedures (SOPs), and real-time context about the operating environment. It uses these inputs to allocate tasks among the available bipedal and wheeled robots so as to maximize productivity and uptime.
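The following Python sketch gives a rough illustration of the allocation problem System 3 solves. It assigns each task to the least-loaded robot that has the required skill. This greedy heuristic is a hypothetical substitute chosen for clarity. The article describes an agentic model that also weighs SOPs and live context, and the `Robot`, `Task`, and `allocate` names are not part of any published KinetIQ API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Robot:
    robot_id: str
    embodiment: str                      # "bipedal" or "wheeled"
    skills: Set[str]
    queue: List[str] = field(default_factory=list)


@dataclass(frozen=True)
class Task:
    task_id: str
    required_skill: str


def allocate(tasks: List[Task], robots: List[Robot]) -> Dict[str, Optional[str]]:
    """Greedy heuristic: give each task to the least-loaded capable robot.

    Ties are broken by robot_id so the result is deterministic. Tasks no robot
    can perform map to None, to be escalated instead of silently dropped.
    """
    assignment: Dict[str, Optional[str]] = {}
    for task in tasks:
        capable = [r for r in robots if task.required_skill in r.skills]
        if not capable:
            assignment[task.task_id] = None
            continue
        chosen = min(capable, key=lambda r: (len(r.queue), r.robot_id))
        chosen.queue.append(task.task_id)
        assignment[task.task_id] = chosen.robot_id
    return assignment


fleet = [
    Robot("humanoid-1", "bipedal", {"climb_stairs", "pick"}),
    Robot("wheeled-1", "wheeled", {"move_pallet", "pick"}),
    Robot("wheeled-2", "wheeled", {"move_pallet", "pick"}),
]
jobs = [Task("t1", "move_pallet"), Task("t2", "pick"), Task("t3", "climb_stairs"),
        Task("t4", "pick"), Task("t5", "fly")]
schedule = allocate(jobs, fleet)
```

Here the stair-climbing task can only go to the bipedal robot, while pallet moves and picks spread across whichever robots are free. This mirrors, in miniature, the bipedal/wheeled division of labour the orchestrator manages.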

One level down, System 2 is the robot-level reasoning agent. It plans how to interact physically with the world to achieve the goals assigned by System 3. Operating on timescales from about one second to just under a minute, this layer uses an omni-modal language model to perceive the robot's surroundings through its sensors and to interpret the high-level instructions it receives from System 3. Its main job is to break broad objectives into a logical sequence of smaller sub-tasks. It then reasons about the physical actions each sub-task requires, such as the best way to approach an object or the right order in which to manipulate it. These plans are not fixed, pre-programmed scripts. They are updated continuously from real-time visual context, so the robot can adapt when its environment changes unexpectedly.
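System 2's decompose-then-replan behaviour can be sketched as a loop that executes one sub-task at a time. Whenever the latest observation contradicts the assumptions the plan was built on, the loop rebuilds the remaining steps. In this hypothetical Python sketch, a fixed `plan` function stands in for the omni-modal model, and a list of world snapshots stands in for live visual context.

```python
from typing import Dict, List

World = Dict[str, str]  # object name -> observed location


def plan(obj: str, destination: str, world: World) -> List[str]:
    """Hypothetical decomposition of 'move obj to destination' into sub-tasks."""
    return [f"move_to({world[obj]})", f"pick({obj})",
            f"move_to({destination})", f"place({obj})"]


def execute(obj: str, destination: str, observations: List[World]) -> List[str]:
    """Run sub-tasks in order, replanning if the object moves before it is picked.

    observations[t] is the snapshot seen after t sub-tasks; the last snapshot
    is reused once the list runs out.
    """
    executed: List[str] = []
    world = observations[0]
    remaining = plan(obj, destination, world)
    holding = False
    t = 0
    while remaining:
        step = remaining.pop(0)
        executed.append(step)
        if step.startswith("pick("):
            holding = True
        t += 1
        latest = observations[min(t, len(observations) - 1)]
        if not holding and latest[obj] != world[obj]:
            world = latest                             # trust the newer observation
            remaining = plan(obj, destination, world)  # rebuild the rest of the plan
    return executed


# The tote is moved from shelf_A to shelf_B while the robot is walking over.
trace = execute("tote", "conveyor", [{"tote": "shelf_A"}, {"tote": "shelf_B"}])
```

In this run the robot heads for `shelf_A`, sees that the tote is now on `shelf_B`, and redirects before picking. It then finishes the original goal without any change to the instruction from System 3.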

From Digital Command to Physical Action

System 1 bridges abstract reasoning and physical execution. It is a Vision-Language-Action (VLA) neural network that carries out the immediate, low-level objectives set by System 2. It translates each sub-task into concrete commands by specifying target poses for parts of the robot's body, such as the hands, torso, or pelvis. It exposes several low-level capabilities, such as picking up an object, placing it in a container, or moving to a new location, which System 2 can invoke with different prompts. The VLM-based reasoning in System 2 selects the most appropriate capability for each situation. To keep progress tracking precise, each System 1 capability reports its status back to System 2. The KinetIQ VLA produces new predictions on a sub-second timescale. These predictions are computed asynchronously while the robot keeps moving, so the framework uses a technique called prefix conditioning to make each new prediction continue smoothly from the previous one, avoiding jerky or contradictory movements.
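The article does not describe how KinetIQ implements prefix conditioning. The Python sketch below therefore only illustrates the general idea under stated assumptions. The VLA emits chunks of future actions, and inference takes a few control ticks, during which the robot keeps executing the old chunk. The new chunk is then generated conditioned on those already-committed actions. `toy_policy` is a hypothetical rate-limited stand-in for the neural network, and the 1-D positions, horizon, and latency are illustrative.

```python
from typing import List, Sequence


def toy_policy(prefix: Sequence[float], target: float, horizon: int,
               max_step: float = 0.1) -> List[float]:
    """Hypothetical stand-in for the VLA, predicting a chunk of 1-D positions.

    The chunk is conditioned on a non-empty `prefix`: it reproduces the prefix
    exactly and continues from its last value toward `target`, moving at most
    `max_step` per tick.
    """
    if not prefix:
        raise ValueError("prefix must contain at least the current position")
    chunk = list(prefix)
    pos = chunk[-1]
    while len(chunk) < horizon:
        pos += max(-max_step, min(max_step, target - pos))
        chunk.append(pos)
    return chunk


def replan_with_prefix(prev_chunk: Sequence[float], elapsed: int, latency: int,
                       target: float, horizon: int) -> List[float]:
    """Start a new prediction `elapsed` ticks into `prev_chunk`.

    Inference takes `latency` ticks, during which the robot keeps executing
    prev_chunk[elapsed:elapsed + latency]. Those committed actions become the
    prefix, so the new chunk starts exactly where execution will be.
    """
    prefix = prev_chunk[elapsed:elapsed + latency]
    return toy_policy(prefix, target, horizon)


old = toy_policy([0.0], target=1.0, horizon=8)   # heading toward +1.0
elapsed, latency = 3, 2
new = replan_with_prefix(old, elapsed, latency, target=-1.0, horizon=8)  # goal changed
# What the robot actually executes: old actions until the new chunk is ready.
executed = list(old[:elapsed + latency]) + new[latency:]
```

Suppose the new chunk were instead planned only from the position at the moment inference started (`toy_policy([old[elapsed]], ...)`). It would begin where the robot was two ticks earlier, and the switch-over in this example would jump by about 0.3 in a single tick instead of the usual 0.1.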

At the foundation of the hierarchy, System 0 is focused on the robot's physical integrity, stability, and control. Its job is to reach the pose targets set by System 1 while solving for the state of all the robot's joints in a way that keeps the robot dynamically stable at all times. This is the fastest and most reflexive layer in the framework, running at 50 Hz (one control update every 20 ms) to make constant small corrections. KinetIQ implements System 0 with whole-body controllers trained using reinforcement learning (RL) for both its bipedal and wheeled platforms. The RL-based approach lets the controllers exploit the full dynamic capability of each platform's hardware. The result is robust locomotion controllers that can handle complex terrain and maintain balance under disturbances. These controllers were trained entirely in simulation, which required a substantial investment of compute to reach this level of physical control.
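The 50 Hz figure fixes System 0's timing budget at 1/50 s, or 20 ms per control update. The Python sketch below shows a common way to hold such a rate: it schedules against absolute deadlines so that small delays do not accumulate into drift. The proportional-derivative `pd_step` law and the 1-D `Joint` are hypothetical placeholders, not the RL-trained whole-body controller described above. Python's `time.sleep` also offers no hard real-time guarantees.

```python
import time
from dataclasses import dataclass

RATE_HZ = 50                 # System 0 rate stated in the article
PERIOD_S = 1.0 / RATE_HZ     # 0.02 s = 20 ms per control tick


@dataclass
class Joint:
    """Toy 1-D joint: position q (rad) and velocity dq (rad/s), unit inertia."""
    q: float = 0.0
    dq: float = 0.0


def pd_step(joint: Joint, q_target: float, dt: float,
            kp: float = 40.0, kd: float = 8.0) -> None:
    """Placeholder control law (PD, not an RL policy) with a semi-implicit Euler update."""
    torque = kp * (q_target - joint.q) - kd * joint.dq
    joint.dq += torque * dt
    joint.q += joint.dq * dt


def run_fixed_rate(joint: Joint, q_target: float, n_ticks: int,
                   period_s: float = PERIOD_S) -> int:
    """Run n_ticks control steps on absolute deadlines; return the number of overruns."""
    overruns = 0
    next_deadline = time.monotonic()
    for _ in range(n_ticks):
        pd_step(joint, q_target, period_s)
        next_deadline += period_s           # absolute schedule: errors do not accumulate
        remaining = next_deadline - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)
        else:
            overruns += 1                   # this tick exceeded its 20 ms budget
    return overruns
```

Advancing `next_deadline` by a fixed period, instead of sleeping a fixed 20 ms after each step, keeps the average rate at 50 Hz even when individual steps run a little long.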

A Blueprint for Future Autonomy

The launch of this multi-layered cognitive framework is a notable step for physical AI. KinetIQ combines high-level fleet orchestration, situational reasoning, dexterous manipulation, and dynamic stability control in one cohesive system, and in doing so proposes a new model for robotic operation. Its hierarchical approach offers a scalable, adaptable blueprint for the long-standing challenge of coordinating diverse robotic platforms. Cross-embodiment learning in particular points to a practical route to fleet-wide skill acquisition, in which experience gathered on one type of robot benefits all the others. The agentic design, in which each layer treats the one below it as a set of tools, offers an efficient way to translate abstract human intent into precise, real-world robotic action. Together, these ideas lay the groundwork for future systems that can manage complex, multi-robot tasks in dynamic environments with greater autonomy and intelligence.